What is an LLM knowledge base?

An LLM knowledge base is an information repository built around a Large Language Model (LLM). The model lets the knowledge base interpret natural-language questions and generate human-like text in response. Unlike traditional knowledge bases, which rely heavily on manual updates and structured data, LLM-powered systems can ingest and interpret large volumes of unstructured data.

LLM knowledge base vs traditional knowledge base

Traditional knowledge bases are often limited by their reliance on pre-defined schemas and manual data entry, which can be time-consuming and error-prone. In contrast, a knowledge base powered by an LLM can automatically process and contextualize information from diverse sources. This means that whether the data comes from internal documents, emails, or external publications, an LLM knowledge base can make sense of it and provide accurate, contextually relevant responses.

How do LLM-powered knowledge bases work?

LLM knowledge bases utilize natural language processing (NLP) capabilities to analyze and understand text. Here’s a simplified breakdown of how they work:

- Data ingestion: The system ingests data from various sources, including documents, databases, emails, and web content.

- Processing and understanding: Using NLP, the model processes this information to capture context, semantics, and relationships between different data points.

- Knowledge representation: The processed information is stored in a form that is easy to query and retrieve, such as a knowledge graph or an index of text embeddings.

- Question answering and text generation: When a user queries the knowledge base, the LLM draws on the stored information to generate accurate, contextually appropriate responses, often using techniques like retrieval-augmented generation (RAG).
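The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular product works: it assumes plain word counts as a stand-in for real embeddings, and the names `Chunk`, `ingest`, and `search` are hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str      # where the text came from (file name, URL, ...)
    text: str        # the chunk's raw text
    vector: Counter  # term counts, standing in for a real embedding


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def ingest(documents: dict[str, str], max_words: int = 40) -> list[Chunk]:
    """Ingest, process, and represent: one stored vector per chunk."""
    index = []
    for source, text in documents.items():
        for piece in chunk_text(text, max_words):
            index.append(Chunk(source, piece, Counter(tokenize(piece))))
    return index


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(index: list[Chunk], query: str, k: int = 2) -> list[Chunk]:
    """Query step: return the k chunks most similar to the question."""
    q = Counter(tokenize(query))
    return sorted(index, key=lambda c: cosine(q, c.vector), reverse=True)[:k]


# Made-up example documents.
docs = {
    "vpn.md": "To reset your VPN password, open the IT portal and choose Reset.",
    "leave.md": "Employees request vacation through the HR portal at least two weeks ahead.",
}
index = ingest(docs)
top = search(index, "how do I reset my vpn password", k=1)
print(top[0].source)
```

In a production system the word counts would typically be replaced by embeddings from a language model, and the list would be replaced by a vector database or knowledge graph, but the flow from ingestion to query stays the same.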

Retrieval-augmented generation (RAG) and RAG architecture

One of the key technologies underpinning LLM knowledge bases is retrieval-augmented generation (RAG). This approach combines retrieval-based methods with generative models to improve the quality of responses. A RAG architecture integrates two main components:

- Retriever: This component searches the knowledge base for relevant documents using techniques like similarity search and semantic search. The aim is to find the most pertinent pieces of information that can aid in answering the query.

- Generator: This component uses the retrieved documents as context to generate a coherent and contextually accurate response.

Because the generator works from retrieved source material rather than from the model's training data alone, RAG helps LLM knowledge bases provide more precise and contextually rich answers, making them highly effective for real-world applications.
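To make the two components concrete, here is a small sketch of a retriever and a generator working together. It rests on several assumptions: the retriever ranks passages by simple word overlap instead of semantic search, and `stub_llm` is a hypothetical placeholder for a real model call that just echoes the top passage so the example runs on its own.

```python
import re
from typing import Callable


def tokens(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Retriever: rank passages by Jaccard word overlap with the query."""
    q = tokens(query)

    def score(passage: str) -> float:
        t = tokens(passage)
        union = q | t
        return len(q & t) / len(union) if union else 0.0

    return sorted(passages, key=score, reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Place the retrieved passages in front of the question."""
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(context, start=1))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{numbered}\n"
        f"Question: {query}\nAnswer:"
    )


def answer(query: str, passages: list[str], llm: Callable[[str], str], k: int = 2) -> str:
    """Generator step: hand the retrieved context to the model."""
    return llm(build_prompt(query, retrieve(query, passages, k)))


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM: returns the top-ranked passage."""
    for line in prompt.splitlines():
        if line.startswith("[1] "):
            return line[4:]
    return ""


# Made-up example passages.
passages = [
    "Expense reports are due on the fifth business day of each month.",
    "The office Wi-Fi password rotates every quarter.",
    "Submit expense reports through the finance portal.",
]
result = answer("When are expense reports due?", passages, stub_llm)
print(result)
```

Swapping `stub_llm` for a call to your chosen model is the only change needed to turn the prompt into a generated answer; the retriever and prompt assembly stay as they are.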

What are the benefits of an LLM-powered knowledge base?

Deploying an LLM-powered knowledge base in your organization offers numerous advantages that can significantly improve information management and operational efficiency. Here’s an expanded look at these benefits:

Enhanced accuracy

LLMs excel at understanding and generating human-like text, and when their answers are grounded in your organization's own documents, they tend to be more precise and contextually relevant. This reduces the margin for error and helps users get accurate information quickly, which means more reliable data for strategic decision-making and fewer resources spent on verifying information.

Scalability

LLM-powered knowledge bases can handle vast amounts of data from multiple sources, growing dynamically as your information expands. This scalability ensures that your workplace knowledge base remains up-to-date and comprehensive, capable of managing everything from routine queries to complex research tasks.

Time efficiency

Automating data ingestion and understanding significantly reduces the time and effort required for manual data entry and updates. This time-saving aspect allows your team to focus on more strategic tasks rather than being bogged down by the tedious and time-consuming data management process. It also means quicker onboarding and less downtime as the knowledge base can rapidly integrate new information.

Improved decision-making

Quick access to comprehensive and accurate information empowers tech leaders to make better-informed decisions. By leveraging the deep insights an LLM knowledge base provides, decision-makers can confidently navigate complex scenarios backed by reliable data. This leads to more effective strategies, optimized operations, and a stronger competitive edge.

User-friendly interface

The natural language capabilities of LLMs make interacting with the knowledge base intuitive and easy for users. Instead of navigating through complex databases or learning specific query languages, users can simply ask questions in natural language and receive accurate responses. This enhances user satisfaction and reduces the learning curve, encouraging wider organizational adoption.

Domain-specific customization

Through fine-tuning, LLMs can be adapted to specific domains, enhancing their ability to understand and generate domain-specific content. Whether your organization operates in finance, healthcare, technology, or any other sector, the LLM knowledge base can be tailored to meet your unique needs, providing highly relevant and specialized information.

Enhanced collaboration

With LLM-powered knowledge bases, information is easily accessible and shareable across the organization. This fosters better collaboration, as team members can effortlessly find and use shared knowledge. By breaking down information silos, LLM knowledge bases enable a more integrated and cohesive working environment.

Real-time insights

LLM-powered knowledge bases can process and analyze data in real time, providing up-to-date insights that are crucial for dynamic decision-making environments. This real-time capability helps your organization operate with the latest information, which is essential for maintaining agility in fast-paced industries.

Secure and controlled access

Using your own LLM API key lets your organization maintain control over access permissions, logs, and restrictions. This is particularly important for companies concerned about data privacy and compliance risks. With visibility into every interaction with the knowledge base, you can verify that sensitive information is protected and accessed appropriately.
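As a rough illustration of what controlled, logged access can look like, the sketch below filters documents by user group and records every query. The class and field names (`AuditedKnowledgeBase`, `allowed_groups`) are hypothetical and do not describe any specific vendor's implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Document:
    text: str
    allowed_groups: frozenset  # groups permitted to read this document


@dataclass
class AuditedKnowledgeBase:
    documents: list
    log: list = field(default_factory=list)

    def visible_to(self, user_groups: set) -> list:
        """Return only the documents shared with at least one of the user's groups."""
        return [d for d in self.documents if d.allowed_groups & user_groups]

    def query(self, user: str, user_groups: set, question: str) -> list:
        """Apply permissions first, then record the interaction in the audit log."""
        hits = self.visible_to(user_groups)
        self.log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "question": question,
            "documents_returned": len(hits),
        })
        return hits


# Made-up example data.
kb = AuditedKnowledgeBase([
    Document("Holiday calendar for all staff.", frozenset({"staff"})),
    Document("Salary bands by level.", frozenset({"hr"})),
])
hits = kb.query("dana", {"staff"}, "When is the next holiday?")
print([d.text for d in hits], len(kb.log))
```

Filtering before any text reaches the model is the key design choice here: a document the user cannot see never enters the prompt, so it cannot leak into an answer.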

How to get started with an LLM knowledge base

Implementing an LLM-powered knowledge base may seem daunting, but with the right approach, it can be a seamless process:

- Assess your needs: Identify your organization’s specific information management challenges and how an LLM knowledge base can address them.

- Choose the right platform: Select a robust LLM knowledge base software that aligns with your needs. Consider factors like scalability, integration capabilities, and user-friendliness.

- Data preparation: Ensure your data is clean and well-organized. The better the quality of your source data, the more effective your knowledge base will be.

- Fine-tuning: Customize the LLM to understand and generate text specific to your domain. Fine-tuning involves training the model on domain-specific data to enhance its performance.

- Pilot program: Start with a pilot program to test the system’s capabilities and gather feedback.

- Training and adoption: Train your team on using the new system and encourage adoption by demonstrating its value.
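For the data preparation step, a simple cleaning pass often goes a long way. The sketch below is one possible approach, assuming each record is a dictionary with hypothetical `source` and `text` keys: it normalizes whitespace, drops empty records, and removes duplicates that differ only in case or spacing.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Collapse runs of whitespace and trim both ends."""
    return re.sub(r"\s+", " ", text).strip()


def prepare(records: list[dict]) -> list[dict]:
    """Drop empty records and duplicates (ignoring case and spacing)."""
    seen = set()
    cleaned = []
    for record in records:
        text = normalize(record.get("text", ""))
        if not text:
            continue  # nothing useful to index
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # same content already kept from an earlier record
        seen.add(digest)
        cleaned.append({"source": record.get("source", "unknown"), "text": text})
    return cleaned


# Made-up example records.
raw = [
    {"source": "wiki", "text": "  Reset your password\nin the IT portal. "},
    {"source": "email", "text": "reset your password in the IT portal."},
    {"source": "wiki", "text": "   "},
]
clean = prepare(raw)
print(clean)
```

Real data sets usually need more than this, such as removing outdated pages or near-duplicates, but even a basic pass keeps redundant or empty content out of the index.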

LLM knowledge base software: GoSearch Enterprise Search

GoSearch is an LLM-powered knowledge base software that offers robust features designed to meet the needs of modern enterprises. With GoSearch, organizations can seamlessly integrate their workplace apps and data sources, leverage advanced NLP capabilities, and ensure their knowledge base evolves in real time with their information landscape.

Key features of GoSearch:

Bring Your Own LLM API Key

GoSearch allows organizations to bring their own LLM API key. Using your own API key grants you control over access, logs, and restrictions, providing full visibility into the interactions of your large language model. This is a great option for companies concerned about the security implications of using an LLM.

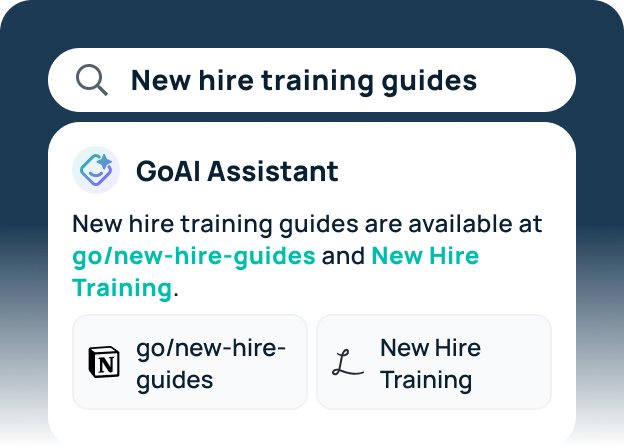

Generative AI Workplace Assistant

GoSearch supercharges your enterprise search experience with an AI assistant powered by GPT-4. Workplace resource recommendations and instant answers are just a chat away, enhancing productivity and efficiency.

AI-Powered Enterprise Search

GoSearch AI leverages your connectors like Google Docs, Notion, Jira, Confluence, and more to filter and display the most relevant information, resources, and people according to your search. This ensures that you always have access to the most pertinent information.

Secure Enterprise Search Experience

GoAI only searches and references apps you’ve connected to GoSearch. Likewise, users are never given access to restricted information, ensuring that your data remains secure.

Personalized Recommendations

Initiate a chat with GoAI to get personalized suggestions tailored to your specific needs and queries, improving the relevance and accuracy of search results.

Power your knowledge base with AI

Adopting an LLM knowledge base can significantly enhance how your organization manages and utilizes information. With benefits like improved accuracy, scalability, and time efficiency, this technology is a game-changer.

Explore how GoSearch can help your organization harness the power of LLM knowledge management to improve productivity and efficiency.
