Retrieving the right information quickly is essential for productivity and innovation. Yet many organizations still struggle with inefficient search tools that rely on outdated keyword-based methods. Enter large language models (LLMs): advanced AI systems designed to understand context, semantics, and intent. When integrated into enterprise search platforms, LLMs and LLM-powered knowledge bases can significantly improve information retrieval by delivering more accurate, relevant, and personalized results.

For companies to justify their investment in LLM-powered search solutions like GoSearch, it’s essential to measure the return on investment (ROI). In this post, we’ll explore the fundamental ways LLMs improve enterprise search, discuss the metrics to track ROI, and provide strategies for unlocking their full potential across your organization.

How LLMs Add Value to Enterprise Search

Traditional enterprise search engines rely heavily on keyword matching. As a result, they often surface irrelevant results or struggle to connect related content stored across multiple systems. LLM-powered search solutions overcome these limitations by:

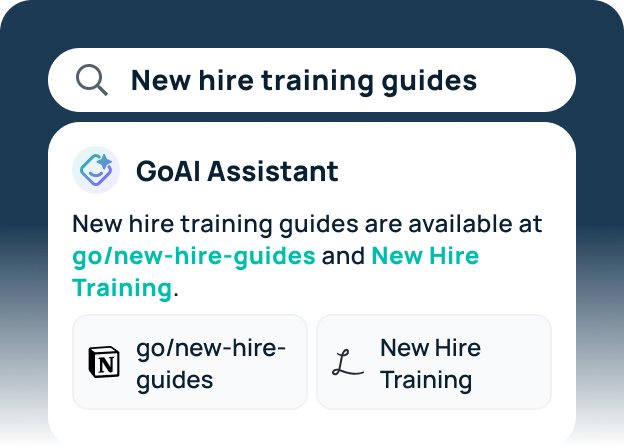

- Understanding Context and Intent: LLM-powered search can grasp the meaning behind queries, even when users phrase them conversationally or vaguely. For example, a user asking, “How do I submit an expense report?” will receive the relevant expense policy documents and step-by-step instructions, even if those documents don’t use the exact words in the query.

- Semantic Search for Relevant Results: LLMs enable semantic search, which retrieves information based on meaning and intent rather than keyword matches alone. This helps users find the most relevant documents even when terminology varies (e.g., “annual leave” vs. “paid time off”).

- Personalized and Context-Aware Search: LLM-powered platforms can tailor search results based on a user’s role, department, or recent activity. For example, an HR professional searching for “benefit updates” will see HR policy changes, while an IT employee running the same query may see updates about the systems that support benefits enrollment.

- Increased Efficiency Through Summarization: Besides retrieving documents, LLMs can generate summaries or highlight critical sections, so employees can digest information quickly without opening and reading every underlying document.

- Unified Search Across Multiple Systems: Enterprises today store data across many distinct platforms, including SharePoint, Slack, email, and internal databases. An LLM-powered search platform that connects to these sources lets employees retrieve information from all of them through a single interface.
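To make the keyword-versus-semantic distinction concrete, here is a minimal Python sketch of semantic ranking by cosine similarity. It assumes every document and query has already been converted into an embedding vector; the three-dimensional vectors below are hand-picked, hypothetical stand-ins for the much larger vectors a real embedding model would produce, and the sketch does not describe how GoSearch or any specific product works internally. The query “paid time off” shares no words with any document title, so the crude keyword baseline scores every document zero, while cosine similarity still ranks the leave policy first.

```python
import math

# Hypothetical, hand-picked 3-dimensional "embeddings". A real system would
# get high-dimensional vectors from an embedding model; these are illustrative.
DOCS = {
    "Annual leave policy": [0.9, 0.1, 0.0],
    "Expense report submission guide": [0.1, 0.9, 0.1],
    "VPN setup instructions": [0.0, 0.1, 0.9],
}
QUERY_TEXT = "paid time off"
QUERY_VEC = [0.85, 0.15, 0.05]  # hypothetical embedding of QUERY_TEXT


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def keyword_overlap(query: str, title: str) -> int:
    """Count words shared by the query and a title (a crude keyword baseline)."""
    return len(set(query.lower().split()) & set(title.lower().split()))


def semantic_rank(query_vec: list[float], docs: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (title, score) pairs sorted by descending cosine similarity."""
    scored = [(title, cosine(query_vec, vec)) for title, vec in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for title, score in semantic_rank(QUERY_VEC, DOCS):
        overlap = keyword_overlap(QUERY_TEXT, title)
        print(f"{score:.3f}  keyword_overlap={overlap}  {title}")
```

Production systems typically store embeddings in a vector index for fast nearest-neighbor lookup instead of scoring every document in a loop; the brute-force loop here just keeps the idea visible.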

Key Metrics to Track the ROI of LLM-Powered Enterprise Search

Measuring ROI involves understanding both tangible and intangible benefits. Here are the critical metrics organizations should track to quantify the value of LLMs in enterprise search:

1. Search Success Rate

- Definition: The percentage of search queries that yield relevant results on the first attempt.

- Impact: A higher search success rate translates directly into time savings, because employees find what they need without wading through irrelevant content.

2. Time Saved Per Search

- Definition: The reduction in time spent on information retrieval compared to previous search methods.

- Impact: Even five minutes saved per search adds up to significant productivity gains when multiplied across hundreds of employees and their searches each week.

3. User Adoption Rate

- Definition: The percentage of employees actively using the new search platform.

- Impact: High adoption rates indicate that the solution is user-friendly and delivers value. Tracking this metric helps assess the rollout’s success and identify areas for improvement.

4. Reduced Dependence on IT or HR Support

- Definition: The decrease in the volume of requests submitted to IT or HR support teams.

- Impact: With LLM-powered self-service search, employees can resolve issues independently, easing the burden on support teams and reducing response times.

5. Employee Satisfaction and Engagement

- Definition: Feedback scores from employees on their experience using the search tool.

- Impact: Employees who can easily access the information they need feel more empowered and engaged, which contributes to overall job satisfaction.

6. Faster Decision-Making

- Definition: The reduction in time needed to gather insights for business decisions.

- Impact: When teams can quickly find relevant data, they make better-informed decisions faster, driving innovation and responsiveness.

7. Reduced Duplicate Work

- Definition: The decline in repeated tasks or redundant projects caused by employees not finding relevant information.

- Impact: An efficient search tool prevents duplication of effort, ensuring resources are used more effectively.
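Several of these metrics reduce to simple ratios, and time saved can be rolled up into a basic ROI estimate of (benefit - cost) / cost. The Python sketch below shows one way to compute them. Every input is hypothetical: the `success_first_try` flag, the 10 searches per week, the 200 employees, the $50 hourly cost, and the $150,000 annual platform cost are placeholders to replace with your own analytics and finance data.

```python
from dataclasses import dataclass


@dataclass
class SearchEvent:
    """One logged query. `success_first_try` is a hypothetical flag, e.g. set
    when the user opens a result from the first results page without
    rephrasing the query."""
    user_id: str
    success_first_try: bool


def search_success_rate(events: list[SearchEvent]) -> float:
    """Share of queries that yielded a relevant result on the first attempt."""
    if not events:
        return 0.0
    return sum(e.success_first_try for e in events) / len(events)


def adoption_rate(active_users: int, total_employees: int) -> float:
    """Share of employees actively using the new search platform."""
    return active_users / total_employees


def support_request_reduction(before: int, after: int) -> float:
    """Relative drop in IT/HR support requests between two comparable periods."""
    return (before - after) / before


def annual_hours_saved(minutes_per_search: float, searches_per_week: float,
                       employees: int, working_weeks: int = 48) -> float:
    """Total hours saved per year across all employees."""
    return minutes_per_search * searches_per_week * employees * working_weeks / 60


def roi(annual_benefit: float, annual_cost: float) -> float:
    """Return on investment as a ratio: (benefit - cost) / cost."""
    return (annual_benefit - annual_cost) / annual_cost


if __name__ == "__main__":
    # Hypothetical sample data; replace with real logs and finance figures.
    events = [SearchEvent("u1", True), SearchEvent("u2", True),
              SearchEvent("u3", False), SearchEvent("u1", True)]
    hours = annual_hours_saved(minutes_per_search=5, searches_per_week=10, employees=200)
    benefit = hours * 50  # assumed fully loaded cost of $50 per employee-hour
    print(f"Search success rate: {search_success_rate(events):.0%}")
    print(f"Adoption rate: {adoption_rate(150, 200):.0%}")
    print(f"Support request reduction: {support_request_reduction(500, 350):.0%}")
    print(f"Hours saved per year: {hours:,.0f}")
    print(f"ROI: {roi(benefit, annual_cost=150_000):.0%}")
```

With these placeholder inputs, five minutes saved on each of 10 weekly searches by 200 employees over 48 working weeks comes to 8,000 hours. At $50 per hour that is $400,000 of value against a $150,000 cost, an ROI of about 167%. The arithmetic is only as good as the inputs, so measure time saved against a real baseline rather than assuming it.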

Strategies for Maximizing ROI with LLM-Powered Search

To unlock the full potential of LLM-based search solutions, organizations must take steps to ensure smooth implementation and widespread adoption.

- Training and Onboarding: Ensure employees understand how to use the new search tool effectively. Provide training sessions and demos to showcase the platform’s capabilities, such as conversational search and summarization features.

- Continuous Learning and Optimization: AI models need regular updates to stay relevant. Incorporate feedback loops to fine-tune search algorithms, ensuring the platform evolves with changing business needs and language trends.

- Integrating with Core Systems: Maximize the value of LLM-powered search by connecting it to all major knowledge repositories, such as email, CRMs, HR platforms, and project management tools, so users get a truly unified search experience.

- Monitor and Analyze Usage Patterns: Use analytics to track search queries, trends, and gaps in information retrieval. These insights can guide the creation of new content or the restructuring of existing knowledge bases.
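One practical way to turn usage analytics into content decisions is to look for queries that return nothing, or that return results nobody clicks. The Python sketch below assumes a hypothetical log of `(query, result_count, clicked)` tuples; real search platforms expose analytics in their own formats, so treat the record shape as a placeholder.

```python
from collections import Counter

# Hypothetical search log: (query text, number of results, whether any result was clicked)
LOG = [
    ("Parental leave policy", 0, False),
    ("parental leave policy", 0, False),
    ("vpn setup", 12, True),
    ("Q3 sales deck", 4, False),
    ("parental leave policy ", 0, False),
    ("q3 sales deck", 3, False),
    ("expense report", 8, True),
]


def normalize(query: str) -> str:
    """Lowercase and trim so trivially different spellings are counted together."""
    return query.strip().lower()


def content_gaps(log: list[tuple[str, int, bool]], top_n: int = 5):
    """Return the most frequent zero-result queries and no-click queries.

    Zero-result queries suggest missing content; queries whose results are
    never clicked suggest existing content is hard to find or poorly titled.
    """
    zero_results = Counter(normalize(q) for q, count, _ in log if count == 0)
    no_clicks = Counter(normalize(q) for q, count, clicked in log
                        if count > 0 and not clicked)
    return zero_results.most_common(top_n), no_clicks.most_common(top_n)


if __name__ == "__main__":
    missing, unclicked = content_gaps(LOG)
    print("Zero-result queries:", missing)
    print("Results but no clicks:", unclicked)
```

Here the repeated “parental leave policy” searches point to a document that may need to be written, while “Q3 sales deck” points to content that exists but may need a clearer title or better metadata.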

A New Era of Enterprise Search with Measurable Impact

Investing in LLM-powered enterprise search tools like GoSearch can yield significant returns, improving productivity, decision-making, and employee satisfaction. To gain an accurate measure of success, it’s crucial to use concrete metrics such as search success rates, time saved, and user adoption.

With thoughtful implementation and continuous optimization, LLM-powered search tools can become a central pillar of digital transformation, enabling organizations to unlock hidden knowledge and empower employees. By tracking the right metrics, companies can confidently demonstrate the ROI of these AI-driven solutions, ensuring long-term success and competitive advantage.

Now is the time for businesses to rethink their search strategies and embrace the power of LLMs. In a world where time and information are everything, fast and accurate access to knowledge isn’t just a nice-to-have; it’s essential.

GoSearch: Transforming Enterprise Search with AI-Powered Insights

GoSearch is an advanced enterprise search solution, powered by large language models (LLMs), that is designed to help organizations unlock knowledge, boost productivity, and improve decision-making. With GoSearch, employees can retrieve relevant information across multiple systems (emails, CRMs, HR platforms, and more) using natural language queries.

GoSearch’s semantic search, personalized results, and built-in AI summarization tools ensure that users get accurate answers faster. Whether it’s finding policy documents, project files, or business insights, GoSearch makes information retrieval intuitive and effortless.

Ready to see GoSearch in action? Request your demo today and discover how AI-powered search can transform your organization.

FAQs

1. What is an LLM-powered knowledge base search?

A knowledge base search powered by a large language model (LLM) uses advanced AI to understand and retrieve relevant information from structured and unstructured data sources. Unlike traditional search tools that rely on exact keyword matches, LLMs interpret context, semantics, and intent, so users can get precise answers even with vague or conversational queries.

2. How does LLM search improve knowledge retrieval compared to traditional search tools?

LLM-powered search provides several improvements over keyword-based search:

- Context-aware results: It understands the meaning behind queries, not just individual words.

- Semantic search: Connects related concepts even if different terminology is used (e.g., “remote work” vs. “telecommuting”).

- Summarization: Long documents are condensed into easy-to-read summaries or key points.

- Conversational interaction: Users can engage with the system as if chatting with a virtual assistant.

3. What types of data can an LLM knowledge base search handle?

LLM-based search can work with both unstructured content, such as emails, meeting notes, PDFs, and internal documents, and structured data, such as spreadsheets, databases, and CRM records, typically through connectors to each source. By linking multiple repositories, these solutions create a unified knowledge hub that breaks down silos between departments and systems.

4. How can LLMs improve employee productivity and knowledge sharing?

LLM-powered search enhances productivity by reducing the time spent searching for information. Employees can find what they need quickly, without sifting through irrelevant results. It also fosters better knowledge sharing across departments by surfacing related content from different sources, promoting collaboration and reducing duplicated work.

5. What are the challenges of using LLMs in knowledge base search, and how can they be addressed?

While LLMs offer many benefits, some challenges include:

- Bias and accuracy: AI models can sometimes generate incorrect or biased results, requiring ongoing monitoring and fine-tuning.

- Data privacy: Integrating with sensitive data requires robust security protocols and role-based access control.

- Scalability and freshness: As content grows and terminology evolves, search indexes and models need regular updates to stay aligned with them.

Organizations can address these challenges by establishing governance frameworks, monitoring usage patterns, and ensuring continuous optimization through feedback loops.
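The role-based access control mentioned under data privacy is commonly enforced by filtering results against each document’s permissions before anything is shown or summarized. Here is a minimal Python sketch of that idea. The `Document` and `User` types and the role names are hypothetical; many deployments mirror permissions from the source systems (for example, SharePoint or Slack access settings) rather than maintaining a separate list like this one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    allowed_roles: frozenset[str]  # hypothetical ACL recorded when the document is indexed


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]


def can_view(user: User, doc: Document) -> bool:
    """A user may view a document if they hold at least one of its allowed roles."""
    return not user.roles.isdisjoint(doc.allowed_roles)


def filter_results(user: User, ranked: list[Document]) -> list[Document]:
    """Drop documents the user may not see, preserving the original ranking order.

    Applying this before summarization keeps restricted text out of any
    answer generated for this user.
    """
    return [doc for doc in ranked if can_view(user, doc)]


if __name__ == "__main__":
    ranked = [
        Document("Salary bands", frozenset({"hr"})),
        Document("Holiday calendar", frozenset({"all_staff"})),
        Document("Firewall runbook", frozenset({"it"})),
    ]
    alice = User("alice", frozenset({"hr", "all_staff"}))
    bob = User("bob", frozenset({"it", "all_staff"}))
    print([d.title for d in filter_results(alice, ranked)])
    print([d.title for d in filter_results(bob, ranked)])
```

The key design choice is where the filter runs: checking permissions before ranking results are passed to a summarizer means the model never sees text the user is not entitled to read.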